Deep Fake – The Greatest Threat to the Idea of Truth

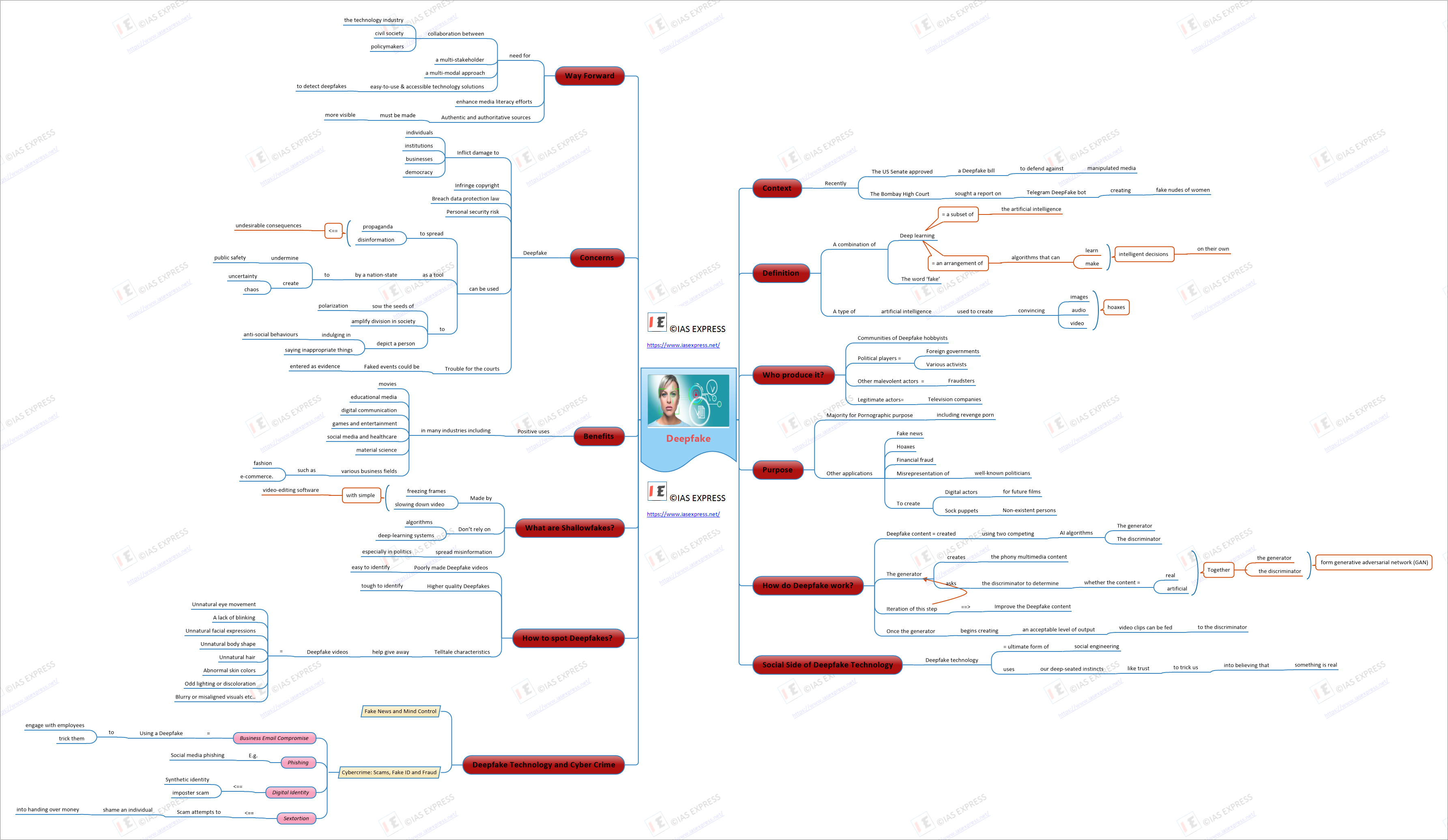

Recent advances in artificial intelligence (AI) and cloud computing have made audio, video, and image manipulation techniques rapidly more sophisticated. The resulting synthetic media content is commonly referred to as “deepfakes.” Deepfakes are one of the serious threats of our times. The word ‘deepfake’ is a combination of ‘deep learning’ (a subset of artificial intelligence) and ‘fake’. The rise in deepfakes has caused harm across the globe in damaging and sinister ways. Recently, the US Senate approved a deepfake bill to defend against manipulated media; the proposed legislation calls for research into detecting synthetic shams online. The Bombay High Court has also recently sought a report on a Telegram deepfake bot that creates fake nude images of women. In this context, let’s analyse deepfakes in detail.

This topic, “Deep Fake – The Greatest Threat to the Idea of Truth”, is important from the perspective of the UPSC IAS Examination and falls under the General Studies portion.

What is meant by Deepfake?

- Deepfake (or deep fake) is a type of artificial intelligence used to create convincing image, audio and video hoaxes.

- As mentioned before, the term, which describes both the technology and the resulting bogus content, is a portmanteau of deep learning and fake.

- Deep learning is “a subset of AI,” and refers to layered neural-network algorithms that learn patterns from large amounts of data and make decisions on their own.

- Deepfakes become dangerous when people use the technology for nefarious purposes, because it can make people believe something is real when it is not.

- They could be used to spread false information that appears to come from an otherwise trusted source, for example in election propaganda. This can be done by cloning a public figure’s voice or superimposing one person’s face on another person’s body.

- This technology can pose a serious threat to modern life.

Who Produces Deepfakes?

- There are at least four major types of deepfake producers:

- Communities of deepfake hobbyists

- Political players such as foreign governments, and various activists

- Other malevolent actors such as fraudsters

- Legitimate actors, such as television companies

What are they for?

- Many are pornographic. The AI firm Deeptrace found 15,000 deepfake videos online in September 2019, a near doubling over nine months. A staggering 96% were pornographic and 99% of those mapped faces from female celebrities on to porn stars.

- As new techniques allow unskilled people to make deepfakes with a handful of photos, fake videos are likely to spread beyond the celebrity world to fuel revenge porn.

- Deepfakes have garnered widespread attention for their use in creating fake news, hoaxes, and financial fraud.

- Deepfakes have been used to misrepresent well-known politicians in videos.

- There has been speculation about deepfakes being used for creating digital actors for future films. Digitally constructed/altered humans have already been used in films before, and deepfakes could contribute new developments in the near future.

- Deepfakes have begun to appear on popular social media platforms, where manipulated content can mislead society in many ways.

- Deepfake photographs can be used to create sock puppets, non-existent persons, who are active both online and in traditional media.

How do Deepfakes work?

- Deepfake content is created by using two competing AI algorithms – one is called the generator and the other is called the discriminator.

- The generator, which creates the phony multimedia content, asks the discriminator to determine whether the content is real or artificial.

- Together, the generator and discriminator form something called a generative adversarial network (GAN).

- Each time the discriminator accurately identifies content as being fabricated, it provides the generator with valuable information about how to improve the next deepfake.

- The first step in establishing a GAN is to identify the desired output and create a training dataset for the generator.

- Once the generator begins creating an acceptable level of output, video clips can be fed to the discriminator.

- As the generator gets better at creating fake video clips, the discriminator gets better at spotting them. Conversely, as the discriminator gets better at spotting fake video, the generator gets better at creating them.
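The generator/discriminator loop described above can be illustrated with a deliberately tiny sketch. Everything in it is an assumption made for illustration: the "real data" is just numbers drawn around 4.0 rather than images or video, the generator is a one-line function `G(z) = a*z + b`, the discriminator is a single logistic unit, and the names `ToyGAN` and `train` are hypothetical. Real deepfake systems use large neural networks, but the alternating updates follow the same idea.

```python
import math
import random

# Hypothetical "real data": numbers drawn from a normal distribution.
REAL_MEAN, REAL_STD = 4.0, 0.5
EPS = 1e-12  # guards against log(0)


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def mean(values):
    return sum(values) / len(values)


class ToyGAN:
    def __init__(self, lr=0.05):
        self.a, self.b = 1.0, 0.0  # generator: G(z) = a*z + b
        self.w, self.c = 0.0, 0.0  # discriminator: D(x) = sigmoid(w*x + c)
        self.lr = lr

    def generate(self, z):
        return self.a * z + self.b

    def discriminate(self, x):
        """Estimated probability that x is real."""
        return sigmoid(self.w * x + self.c)

    def d_loss(self, real, noise):
        # Low when real samples score near 1 and fakes score near 0.
        fake = [self.generate(z) for z in noise]
        return (-mean([math.log(max(self.discriminate(x), EPS)) for x in real])
                - mean([math.log(max(1.0 - self.discriminate(f), EPS)) for f in fake]))

    def g_loss(self, noise):
        # Low when the discriminator mistakes fakes for real samples.
        return -mean([math.log(max(self.discriminate(self.generate(z)), EPS))
                      for z in noise])

    def d_step(self, real, noise):
        # One gradient-descent step on the discriminator loss.
        fake = [self.generate(z) for z in noise]
        grad_w = (mean([-(1.0 - self.discriminate(x)) * x for x in real])
                  + mean([self.discriminate(f) * f for f in fake]))
        grad_c = (mean([-(1.0 - self.discriminate(x)) for x in real])
                  + mean([self.discriminate(f) for f in fake]))
        self.w -= self.lr * grad_w
        self.c -= self.lr * grad_c

    def g_step(self, noise):
        # The discriminator's feedback reaches the generator through w:
        # d(loss)/d(fake sample) = -(1 - D(fake)) * w
        d_fake = [-(1.0 - self.discriminate(self.generate(z))) * self.w
                  for z in noise]
        self.a -= self.lr * mean([d * z for d, z in zip(d_fake, noise)])
        self.b -= self.lr * mean(d_fake)


def train(steps=2000, batch=64, seed=0):
    rng = random.Random(seed)
    gan = ToyGAN()
    for _ in range(steps):
        real = [rng.gauss(REAL_MEAN, REAL_STD) for _ in range(batch)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        gan.d_step(real, noise)  # discriminator learns to spot fakes
        gan.g_step(noise)        # generator learns to fool it
    return gan


if __name__ == "__main__":
    gan = train()
    print("generator offset b:", round(gan.b, 2), "| real mean:", REAL_MEAN)
```

In this toy setting the generator's offset `b` is pushed toward the real mean whenever the discriminator can tell the two apart. Simple adversarial training like this is known to oscillate rather than settle neatly, so the printed value should be read as indicative only.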

The Social Side of Deepfake Technology

- Deepfake technology is perhaps the ultimate form of social engineering. It uses our deep-seated instincts, like trust, to trick us into believing that something is real.

- The manipulation of trust is one of the reasons for the success of deepfakes as the fakes are based on the use of real voice or video of people.

- The original purpose of deepfake technology was not to cause harm. On the contrary, deepfakes were designed as a tool to manipulate videos for fun rather than malice.

- Unfortunately, the technology soon took on a new ‘face’.

Deepfake Technology and Cyber Crime

- The areas where deepfake technology is being used for nefarious reasons include the following:

Fake News and Mind Control

- Deepfakes can be real bullies, and ‘fake news’ is a natural home for them.

- Deepfake news was a serious concern for the U.S. 2020 election.

- A fake video of Barack Obama was released to demonstrate how dangerous the technology could be in terms of mass propaganda. It appears to show Barack Obama saying salacious and inflammatory things.

- The federal bill “Malicious Deep Fake Prohibition Act of 2018” was introduced in the US Senate in 2018 to help mitigate this, although it was not enacted.

Cybercrime: Scams, Fake ID and Fraud

- One of the areas that deepfakes are likely to excel in is cybercrime. The following are four key areas that will likely be enhanced by deepfake technology:

1. Business Email Compromise (BEC)

- Social engineering is a key tactic in this scam.

- Using a deepfake to engage with employees and trick them will mean that an organization will need to be even more vigilant in terms of business processes.

2. Phishing

- The use of deepfakes in phishing campaigns would make them more difficult for the individual to detect as a scam.

- For example, in social media phishing, a faked video of a celebrity could be used to extort money from an unwitting victim.

3. Digital Identity

- Synthetic identity and imposter scams are increasingly used in cybercrime.

- Imposter scams are the most frequent complaint made to the Federal Trade Commission (FTC).

- If digital identity systems use verification that requires facial recognition, deepfakes could potentially be used to create fraudulent accounts.

4. Sextortion

- This scam attempts to shame an individual into handing over money.

- The scam usually involves a threat to show a video of the recipient in a compromising position.

- Sextortion could become more sinister if a deepfake video of the recipient were used. High-worth individuals may become the first victims.

How to spot Deepfakes?

- Poorly made deepfake videos may be easy to identify, but higher-quality deepfakes can be tough to spot.

- Continued advances in technology make detection more difficult.

- Certain telltale characteristics can help give away deepfake videos, including these:

- Unnatural eye movement.

- A lack of blinking.

- Unnatural facial expressions.

- Facial morphing — a simple stitch of one image over another.

- Unnatural body shape.

- Unnatural hair.

- Abnormal skin colors.

- Awkward head and body positioning.

- Inconsistent head positions.

- Odd lighting or discoloration.

- Bad lip-syncing.

- Robotic-sounding voices.

- Digital background noise.

- Blurry or misaligned visuals.

- Researchers are developing technology that can help identify deepfakes.

- Many researchers at the University are using machine learning that looks at soft biometrics such as how a person speaks along with facial quirks. Detection has been successful 92 to 96 percent of the time.

- Organizations also are incentivizing solutions for deepfake detection.

What are Shallowfakes?

- Shallowfakes are made by freezing frames and slowing down video with simple video-editing software.

- They don’t rely on algorithms or deep-learning systems.

- Shallowfakes can spread misinformation, especially in politics.

What are the benefits of Deepfake technology?

- Apart from the many concerns, deepfake technology also has positive uses in many industries, including movies, educational media, digital communication, games and entertainment, social media, healthcare, material science, and various business fields such as fashion and e-commerce.

- For example, the film industry can benefit from deepfake technology in multiple ways: it can help create digital voices for actors who have lost theirs to disease, or update film footage instead of reshooting it.

What are the concerns associated with Deepfake?

- Deepfakes can inflict damage to individuals, institutions, businesses and democracy.

- Deepfakes are being used to spread propaganda and disinformation with ease and unprecedented speed and scale. Such disinformation and hoaxes can have undesirable consequences.

- They are not illegal per se, but depending on the content, a deepfake may infringe copyright or breach data protection law.

- Deepfakes can depict a person indulging in anti-social behaviours or saying inappropriate things.

- Deepfakes can also pose a personal security risk: they can mimic biometric data and can potentially trick systems that rely on face or voice recognition.

- Deepfakes can be used to sow the seeds of polarisation and amplify division in society.

- Deepfakes could also mean trouble for the courts, particularly in child custody battles and employment tribunals, where faked events could be entered as evidence.

- A deepfake could be used as a tool by a nation-state to undermine public safety and create uncertainty and chaos in the target country, thereby undermining its democracy.

What is being done to combat Deepfakes?

- Some of the ways in which various governments and companies are trying to detect, combat, and protect against deepfakes include the following:

Social media rules

- Social media platforms like Twitter have policies that outlaw deepfake technology.

- Twitter’s policies involve tagging any deepfake videos that aren’t removed.

- YouTube has banned deepfake videos related to the 2020 U.S. Census, as well as election and voting procedures.

Research lab technologies

- Research labs are using watermarks and blockchain technologies in an effort to detect deepfake technology, but technology designed to outsmart deepfake detectors is constantly evolving.
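One way to picture the blockchain idea is a ledger that records a fingerprint (hash) of each authentic media file, with every entry linked to the previous one so that later tampering is detectable. The sketch below is a minimal illustration under stated assumptions, not a description of any particular lab's system: `ProvenanceLedger`, its methods, and the sample source names are all hypothetical, and the ledger lives in memory rather than on a distributed network.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def fingerprint(media_bytes):
    """SHA-256 digest of the raw media bytes."""
    return hashlib.sha256(media_bytes).hexdigest()


def _block_hash(media_hash, source, prev_hash):
    # Hash the entry's fields in a fixed order so the result is reproducible.
    payload = json.dumps(
        {"media_hash": media_hash, "source": source, "prev_hash": prev_hash},
        sort_keys=True,
    ).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


class ProvenanceLedger:
    """Hypothetical in-memory hash chain of registered media."""

    def __init__(self):
        self.blocks = []

    def register(self, media_bytes, source):
        prev_hash = self.blocks[-1]["block_hash"] if self.blocks else GENESIS
        media_hash = fingerprint(media_bytes)
        block = {
            "media_hash": media_hash,
            "source": source,
            "prev_hash": prev_hash,
            "block_hash": _block_hash(media_hash, source, prev_hash),
        }
        self.blocks.append(block)
        return block["block_hash"]

    def is_registered(self, media_bytes):
        # True only if these exact bytes were registered earlier.
        target = fingerprint(media_bytes)
        return any(block["media_hash"] == target for block in self.blocks)

    def chain_is_intact(self):
        # Recompute every link; any edited entry breaks the chain.
        prev_hash = GENESIS
        for block in self.blocks:
            expected = _block_hash(block["media_hash"], block["source"], prev_hash)
            if block["prev_hash"] != prev_hash or block["block_hash"] != expected:
                return False
            prev_hash = block["block_hash"]
        return True


if __name__ == "__main__":
    ledger = ProvenanceLedger()
    ledger.register(b"original video bytes", "newsroom-camera-1")
    print(ledger.is_registered(b"original video bytes"))
    print(ledger.is_registered(b"original video bytes, altered"))
```

A plain hash matches only byte-identical files, so a legitimately re-encoded or resized copy would also fail this check. That limitation is one reason watermarking, which embeds the mark in the content itself, is explored alongside ledgers.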

Filtering programs

- Programs like Deeptrace are helping to provide protection.

- Deeptrace is a combination of antivirus and spam filters that monitor incoming media and quarantine suspicious content.

Corporate best practices

- Companies are preparing themselves with consistent communication structures and distribution plans.

- The planning includes implementing centralized monitoring and reporting, along with effective detection practices.

Legislation

- While the U.S. government is making efforts to combat nefarious uses of deepfake technology with bills that are pending, three states have taken their own steps.

- Virginia was the first state to impose criminal penalties on non-consensual deepfake pornography.

- Texas was the first state to prohibit deepfake videos designed to sway political elections.

- California passed two laws that allow victims of deepfake videos — either pornographic or related to elections — to sue for damages.

What needs to be done?

- People must take responsibility for being critical consumers of media on the Internet, and should evaluate the authenticity of a message before sharing it on social media.

- There is a need for collaboration between the technology industry, civil society, and policymakers to develop regulations aimed at disincentivizing the creation and distribution of malicious deepfakes.

- Authentic and authoritative sources must be made more visible to help inform people, which will help negate the effects of false news.

- There is a need for a multi-stakeholder and multi-modal approach.

- Media literacy for consumers and journalists is the most effective tool to combat disinformation and deep fakes.

- Media literacy efforts must be enhanced to cultivate an alert public, which can lessen the damage posed by fake news.

- There is a need for easy-to-use and accessible technology solutions to detect deepfakes.

- Governments, universities and tech firms are all funding research to detect deepfakes.

Conclusion

- Deepfakes could become one of the most dangerous tools of crime in the future.

- Work is ongoing to mitigate the threat of malicious deepfakes.

- We need to be aware of the deepfake threat, not only in terms of fake news but also as a sinister tool in the arsenal of cyber criminals.

Practice Question for Mains

- Deepfakes offer an opportunity to the cyber criminal and a challenge to everyone else. Discuss. (250 Words)